Enterprises accumulate vast amounts of unstructured content—policy manuals, contract documents, training videos, operational procedures, scanned files, and meeting transcripts—most of which remain dormant, disconnected from business decision-making. Finding precise information across this content landscape is tedious, slow, and often ineffective.

This architecture introduces a modern solution: an AI-powered knowledge platform on Azure, designed to transform unstructured content into actionable intelligence and make it accessible through a conversational chat experience based on Retrieval-Augmented Generation (RAG). By combining semantic search, document extraction, and generative AI, the solution enables employees to query corporate content naturally, “as if speaking to an expert.”

Whether it’s helping a legal team verify contract clauses, enabling HR to summarize policy documents, or assisting field engineers in retrieving standard operating procedures, this pattern serves as a robust foundation for intelligent, secure, and scalable enterprise knowledge access.

You can choose this solution if…

This architecture is particularly suited for organizations facing challenges around fragmented documentation, knowledge retention, or time-consuming information retrieval. Common scenarios include:

- Contract intelligence: Legal or procurement teams need to query thousands of contracts and extract obligations, terms, or expiration clauses.

- HR and compliance: Employees require quick answers to policy questions, without browsing dozens of PDF handbooks.

- Operations and field service: Frontline teams need instant access to procedures, checklists, or maintenance logs from mobile devices.

- Training and enablement: Organizations want to summarize lengthy training videos or PDF manuals into actionable bullet points or Q&A formats.

- Customer support: Internal agents need real-time guidance from knowledge bases and product documentation.

- Auditing and internal control: Regulatory teams must quickly retrieve references from archived meeting minutes, scanned reports, or compliance logs.

If your enterprise deals with large volumes of unstructured or semi-structured content, and you seek to unlock its value via AI, this pattern provides a secure and scalable model.

Architecture Overview

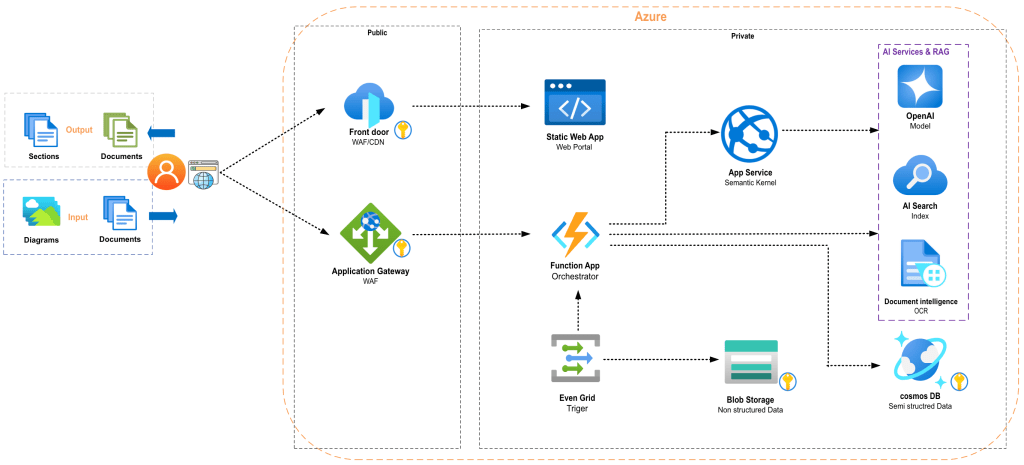

The solution is built as a modular, event-driven architecture, where users can upload documents and receive AI-generated responses grounded in verified internal content. It combines multiple Azure services to process, enrich, store, and serve content securely and intelligently.

Users interact with a Static Web App to upload documents or folders. Upon upload, files are stored in Azure Blob Storage, which emits an event to Event Grid. This triggers an Azure Function App that orchestrates the ingestion pipeline.
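Below is a minimal sketch of the trigger step. It assumes the Function receives events in the Event Grid schema and must first work out which blob was uploaded. Only the `eventType`, `subject`, and `data.url` fields come from the Event Grid BlobCreated event format. `BlobRef`, `parse_blob_created`, and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BlobRef:
    """Location of an uploaded blob, derived from an Event Grid event (hypothetical type)."""
    container: str
    blob_name: str
    url: str


SUBJECT_PREFIX = "/blobServices/default/containers/"


def parse_blob_created(event: dict) -> Optional[BlobRef]:
    """Return the uploaded blob's location, or None for other event types.

    Blob Storage event subjects have the form
    /blobServices/default/containers/<container>/blobs/<blob path>.
    """
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return None  # ignore deletions and other storage events
    subject = event["subject"]
    if not subject.startswith(SUBJECT_PREFIX):
        raise ValueError(f"unexpected subject: {subject}")
    container, sep, blob_name = subject[len(SUBJECT_PREFIX):].partition("/blobs/")
    if not sep or not blob_name:
        raise ValueError(f"unexpected subject: {subject}")
    return BlobRef(container, blob_name, event["data"]["url"])


# Example payload (illustrative values only)
sample = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/uploads/blobs/hr/policy.pdf",
    "data": {"url": "https://contoso.blob.core.windows.net/uploads/hr/policy.pdf"},
}
ref = parse_blob_created(sample)
print(ref.container, ref.blob_name)  # uploads hr/policy.pdf
```

Returning `None` for other event types lets a single subscription also deliver deletions without breaking the ingestion path.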

The Function invokes Azure Document Intelligence to extract structured text from PDFs, Word files, or scans. The content is then split into semantic “chunks,” embedded via Azure OpenAI, and stored in Azure AI Search, which holds both vector and semantic indexes for fast and relevant retrieval.
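The chunking step can be illustrated with a fixed-size sliding window over words. This is a simplified stand-in for the semantic chunking described above, and the platform may not use this method. `chunk_words` and its default sizes are illustrative assumptions. Overlap between neighbouring chunks means a sentence that straddles a boundary can still be retrieved from either side.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping windows of at most max_words words.

    A fixed-size window is used here as a simple substitute for semantic
    chunking; consecutive chunks share `overlap` words.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_words("0 1 2 3 4 5 6 7 8 9", max_words=4, overlap=1))
# ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```

Each returned chunk would then be sent to the embeddings deployment and written to the search index together with its source metadata.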

A backend API hosted on Azure App Service, powered by Semantic Kernel, receives user questions, performs secure semantic search, and formulates a prompt enriched with retrieved content. The final response is generated using Azure OpenAI’s GPT models, returning a grounded, conversational answer.
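The prompt-enrichment step can be sketched without Semantic Kernel. The retrieved chunks are numbered and placed in the system message so the model can cite them. The message list follows the role/content shape used by chat-completion APIs. `RetrievedChunk`, `build_messages`, and the prompt wording are hypothetical. A real deployment would more likely express this as a Semantic Kernel prompt template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievedChunk:
    source: str   # hypothetical field: blob name or document title
    content: str  # chunk text returned by the search query


SYSTEM_PROMPT = (
    "Answer using only the numbered sources below. "
    "Cite sources as [n]. If the sources do not contain the answer, say so."
)


def build_messages(question: str, chunks: list[RetrievedChunk]) -> list[dict]:
    """Assemble chat-completion messages grounded in the retrieved chunks."""
    sources = "\n\n".join(
        f"[{i}] ({c.source})\n{c.content}" for i, c in enumerate(chunks, start=1)
    )
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\n\nSources:\n{sources}"},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "How many days of remote work are allowed?",
    [RetrievedChunk("hr/policy.pdf", "Employees may work remotely up to two days per week.")],
)
```

Numbering the sources makes each citation in the answer traceable back to a specific chunk, which supports the lineage tracking described below.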

All metadata, lineage, and feedback (user reactions, prompt quality, source traceability) are stored in Azure Cosmos DB for governance and analytics.
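Below is one possible shape for a lineage item in Cosmos DB. Cosmos DB only requires a string `id`. Every other field name, and the choice of `documentId` as the partition key, is an assumption made for illustration. `lineage_record` is a hypothetical helper.

```python
import hashlib
from datetime import datetime, timezone


def lineage_record(source_blob: str, chunk_index: int, chunk_text: str,
                   index_name: str) -> dict:
    """Build a Cosmos DB item linking one chunk back to its source file.

    `documentId` (hypothetical) is meant as the partition key, so all
    chunks of a file are stored together.
    """
    document_id = hashlib.sha256(source_blob.encode("utf-8")).hexdigest()[:16]
    return {
        "id": f"{document_id}-{chunk_index}",  # Cosmos DB requires a string id
        "documentId": document_id,
        "type": "chunk",
        "sourceBlob": source_blob,
        "chunkIndex": chunk_index,
        # Store a hash instead of the raw text: keeps the item small and lets
        # re-ingestion detect unchanged chunks.
        "contentSha256": hashlib.sha256(chunk_text.encode("utf-8")).hexdigest(),
        "searchIndex": index_name,
        "ingestedAt": datetime.now(timezone.utc).isoformat(),
    }


item = lineage_record("uploads/hr/policy.pdf", 3, "Remote work policy ...", "kb-index")
```

Feedback and chat-history items could share the same container under a different `type` value, or live in separate containers. That choice depends on the query patterns.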

Private endpoints, Managed Identity, and Key Vault ensure the platform is secure by design. The entire flow is observable, traceable, and scalable across multiple regions.

Roles and Components

Each Azure component in this pattern plays a specific role in orchestrating document ingestion, AI enrichment, search, and conversational output:

| Component | Role |
|---|---|
| Static Web App | Provides the user interface for uploading files, initiating chat queries, and visualizing document history. |
| Azure Blob Storage | Stores raw uploaded content (PDFs, Word, scanned documents, transcripts, etc.) in secure, structured containers. |
| Azure Event Grid | Detects file uploads and triggers the ingestion process asynchronously. |
| Azure Function App | Orchestrates the ingestion pipeline: calling extraction, chunking content, generating embeddings, and storing vectors. |
| Azure Document Intelligence | Extracts structured content (text, tables, key-value pairs) from uploaded documents using OCR and layout analysis. |
| Azure OpenAI (Embeddings) | Generates vector representations of content chunks for similarity-based retrieval. |
| Azure AI Search | Stores both semantic and vector indexes; supports hybrid retrieval for RAG. |
| App Service + Semantic Kernel | Hosts the backend API and orchestrates semantic search, access control, and GPT-based chat reasoning. |
| Azure OpenAI (GPT) | Generates grounded answers by completing prompts with context retrieved from internal indexed data. |
| Azure Cosmos DB | Stores ingestion metadata, document lineage, prompt feedback, and chat history for traceability and improvement. |
| Azure Key Vault | Secures credentials, embedding keys, and service configurations. |

Security

Security is embedded at every stage of the pipeline—ensuring confidentiality, integrity, and controlled access to both documents and AI output.

All sensitive services (Blob Storage, Cosmos DB, AI Search, Key Vault) are accessed through Private Endpoints, ensuring that data remains within the Azure backbone network. Function App and App Service are integrated into the VNet, with outbound traffic managed by firewall policies and private DNS resolution.

To avoid storing credentials such as connection strings and API keys, Managed Identity is adopted across the platform. This lets services authenticate to one another through Microsoft Entra ID without managing secrets.

The API layer implements RBAC-before-RAG: before any retrieval or generation occurs, the system filters documents based on the user’s identity and access rights. This is critical for organizations where different users or departments must not see each other’s documents, even when those documents are stored in the same index.
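A common way to implement RBAC-before-RAG in Azure AI Search is security trimming with a filter. Each chunk stores the groups allowed to read it, and every query is restricted to the caller's groups. The sketch below assumes a hypothetical `Collection(Edm.String)` field named `group_ids`, populated at ingestion time. It also assumes the caller's group IDs are taken from their validated token. `security_filter` is a hypothetical helper that builds the OData `$filter` string.

```python
def security_filter(group_ids: list[str], field: str = "group_ids") -> str:
    """Build an Azure AI Search OData filter for security trimming.

    A chunk matches when any of its allowed groups is one of the caller's
    groups. `field` names a Collection(Edm.String) field (hypothetical).
    """
    if not group_ids:
        # Deny rather than search unfiltered: no filter would expose every document.
        raise PermissionError("caller has no groups; refusing to query")
    if any("|" in g for g in group_ids):
        raise ValueError("group ids must not contain the '|' delimiter")
    # OData string literals escape a single quote by doubling it.
    values = "|".join(g.replace("'", "''") for g in group_ids)
    # search.in with an explicit '|' delimiter, so ids may contain spaces or commas.
    return f"{field}/any(g: search.in(g, '{values}', '|'))"


print(security_filter(["hr", "legal"]))
# group_ids/any(g: search.in(g, 'hr|legal', '|'))
```

Raising on an empty group list is deliberate. Omitting the filter would return every document in the index, which is exactly what RBAC-before-RAG must prevent.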

Finally, to protect end users from unsafe or ungrounded responses, Azure AI Content Safety can be used to evaluate GPT output against predefined policies and filter it accordingly.
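Azure AI Content Safety reports a severity level for each harm category (Hate, SelfHarm, Sexual, Violence). The sketch below covers only the policy decision applied to such a result. It does not show the service call. The threshold values and the `should_block` name are illustrative assumptions and should be tuned per organization.

```python
# Hypothetical policy: block a response once any category reaches severity 2.
DEFAULT_THRESHOLDS = {"Hate": 2, "SelfHarm": 2, "Sexual": 2, "Violence": 2}


def should_block(severities: dict[str, int],
                 thresholds: dict[str, int] = DEFAULT_THRESHOLDS) -> bool:
    """Return True if any category's severity reaches its blocking threshold.

    Categories missing from `severities` are treated as severity 0.
    """
    return any(severities.get(cat, 0) >= limit for cat, limit in thresholds.items())


print(should_block({"Hate": 0, "Violence": 4}))  # True
print(should_block({"Hate": 0, "Sexual": 0}))    # False
```

Keeping thresholds per category lets, for example, a workplace-safety knowledge base tolerate more violence-related content than an HR assistant would.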

Availability and Scalability

The architecture is designed to be resilient, elastic, and regionally scalable, supporting variable ingestion volumes and concurrent user queries.

The ingestion pipeline is event-driven—triggered by Blob Storage events and processed by stateless Function Apps. This allows the platform to automatically scale ingestion as new files arrive, without needing to provision long-running compute.

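The App Service hosting the Semantic Kernel backend can scale out automatically as user traffic grows, for example with autoscale rules on CPU usage or HTTP queue length. Azure AI Search supports replica- and partition-based scaling. Replicas increase query throughput and availability, and partitions add storage and indexing capacity, so each can be tuned to query load or document volume respectively.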
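Azure AI Search capacity is billed in search units, which are the product of replicas and partitions. The helper below checks a proposed configuration against limits assumed here for the Standard tiers: up to 12 replicas, partition counts of 1, 2, 3, 4, 6, or 12, and at most 36 search units. Verify these against the current service limits. The function name is hypothetical.

```python
# Assumed Standard-tier limits; confirm against current Azure AI Search limits.
VALID_PARTITIONS = {1, 2, 3, 4, 6, 12}
MAX_REPLICAS = 12
MAX_SEARCH_UNITS = 36


def search_units(replicas: int, partitions: int) -> int:
    """Return billable search units (replicas x partitions) after validating limits."""
    if partitions not in VALID_PARTITIONS:
        raise ValueError(f"partitions must be one of {sorted(VALID_PARTITIONS)}")
    if not 1 <= replicas <= MAX_REPLICAS:
        raise ValueError(f"replicas must be between 1 and {MAX_REPLICAS}")
    units = replicas * partitions
    if units > MAX_SEARCH_UNITS:
        raise ValueError(f"{units} search units exceeds the {MAX_SEARCH_UNITS} limit")
    return units


print(search_units(replicas=3, partitions=2))  # 6
```

Scaling replicas addresses a query-heavy chat workload. Scaling partitions addresses a growing document corpus. The cost grows with the product of the two.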

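For critical workloads or global deployments, Cosmos DB can be configured for multi-region replication with automatic failover. AI Search has no built-in geo-replication, but separate search services can be deployed in multiple regions. Together these provide low-latency query performance and failover capabilities.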

Because the ingestion flow and query/chat path are decoupled, a spike in uploads will not degrade the responsiveness of AI chat queries.

Network and Connectivity

The solution is built with a secure network topology to protect content, enforce segmentation, and avoid public exposure of core services.

Both App Service and Function App are integrated with a dedicated VNet, allowing them to resolve and connect to Private Endpoints of Blob Storage, Cosmos DB, AI Search, and Key Vault using private DNS zones.
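With private endpoints, a service's public hostname resolves through a CNAME to a `privatelink` name. The private DNS zone linked to the VNet then maps that name to the endpoint's private IP. The mapping below lists the standard zone names for the four services mentioned above, assuming the Cosmos DB NoSQL API. The dictionary keys and the helper name are illustrative.

```python
# Standard private DNS zone names for the services reached over Private Endpoints.
PRIVATE_DNS_ZONES = {
    "blob": "privatelink.blob.core.windows.net",
    "cosmos_sql": "privatelink.documents.azure.com",  # Cosmos DB NoSQL API
    "search": "privatelink.search.windows.net",
    "key_vault": "privatelink.vaultcore.azure.net",
}


def private_link_fqdn(service: str, resource_name: str) -> str:
    """Return the privatelink name a VNet-integrated app should resolve to a private IP."""
    return f"{resource_name}.{PRIVATE_DNS_ZONES[service]}"


print(private_link_fqdn("blob", "contosodocs"))
# contosodocs.privatelink.blob.core.windows.net
```

Each of these zones must be linked to the VNet used by App Service and the Function App. Otherwise the public hostname still resolves to a public IP, and the private endpoint is bypassed.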

Outbound traffic from these components to public Azure services (such as Azure OpenAI) is routed through Azure Firewall. A NAT gateway can optionally provide a stable outbound IP. Together they ensure that only allow-listed destinations can be reached.

For external API exposure (e.g., connecting to Microsoft Teams or custom applications), Azure API Management or Front Door can be layered on top, providing routing, throttling, and caching controls.

In future extensions, Azure Private Link with private DNS overrides could be extended to further isolate traffic and integrate with hybrid or on-premises networks.

Observability

Operational transparency is essential in AI systems. This architecture integrates end-to-end observability across ingestion, AI, and user experience layers.

- Application Insights is enabled for the App Service and Function App to track requests, failures, latency, and dependencies.

- Custom metrics are published to Azure Monitor to capture:
  - Embedding usage and token consumption
  - Retrieval performance (hit@k: the share of queries for which a relevant chunk appears among the top k results)
  - GPT response latency and success rates

- Cosmos DB records ingestion status, document lineage (source file → chunk → vector → query hit), and user feedback.

- A dashboard can be built with Azure Monitor Workbooks or Grafana to visualize ingestion activity, model health, prompt efficiency, and operational KPIs.
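The hit@k retrieval metric listed above can be computed offline from a labelled set of test questions. The sketch assumes that, for each query, you have the ranked chunk IDs returned by AI Search and a set of chunk IDs judged relevant. The function name and input layout are hypothetical.

```python
def hit_at_k(results: list[list[str]], relevant: list[set[str]], k: int) -> float:
    """Fraction of queries with at least one relevant chunk in the top-k results.

    results[i] is the ranked list of chunk ids returned for query i;
    relevant[i] is the set of chunk ids judged relevant for that query.
    """
    if len(results) != len(relevant):
        raise ValueError("results and relevant must have the same length")
    if not results:
        return 0.0
    hits = sum(1 for ranked, gold in zip(results, relevant) if gold.intersection(ranked[:k]))
    return hits / len(results)


results = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant = [{"b"}, {"f"}, {"x"}]
print(hit_at_k(results, relevant, k=2))  # 0.333... (only the first query hits)
print(hit_at_k(results, relevant, k=3))  # 0.666...
```

Tracking this value per index version gives a concrete signal when chunking or ranking settings change.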

This observability layer allows teams to monitor model behavior, audit document usage, and continuously improve prompt quality or retrieval strategy.

Patterns and AI Techniques Used

The platform integrates multiple Azure-native AI and architecture patterns:

- Retrieval-Augmented Generation (RAG): Combines search with generative models to ground responses in enterprise content.

- Event-driven ingestion: Decouples compute from input flow, enabling scalable file handling.

- RBAC-before-RAG: Ensures secure filtering of indexed content before AI answers are generated.

- Chunking and Embeddings: Splits documents into meaning-preserving chunks, mapped into vector space for retrieval.

- Hybrid search ranking: Merges vector similarity with keyword and semantic scoring in Azure AI Search.

- Semantic Kernel orchestration: Abstracts prompt templates, memory, and AI execution logic for maintainability.

- Prompt feedback loop: Captures user feedback to reinforce or retrain prompt grounding strategies over time.
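Azure AI Search documents Reciprocal Rank Fusion (RRF) as the method it uses to merge keyword and vector result lists in hybrid queries. The sketch below reproduces the basic RRF formula for illustration only. It does not replicate the service's exact scoring, including semantic re-ranking when that is enabled. The constant `k = 60` follows the value commonly used for RRF, and the function name is hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists of document ids with Reciprocal Rank Fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    using 1-based ranks, so items ranked well by several retrievers rise.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


vector_hits = ["a", "b", "c"]
keyword_hits = ["b", "d", "a"]
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
print([doc for doc, _ in fused])  # ['b', 'a', 'd', 'c']
```

Document `b` is near the top of both lists, so it outranks `a`, which leads only the vector list. Because RRF uses ranks rather than raw scores, keyword and vector scores never have to be normalized against each other.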

This intelligent knowledge platform on Azure is a modern foundation for enterprise AI enablement. It accelerates information access, improves decision-making, and unlocks the latent value of unstructured content: securely, at scale, and with human-like interaction.

If you’re exploring how to adapt this solution to your industry—whether in legal, finance, HR, operations, or compliance—feel free to reach out or leave a comment. Future patterns will cover advanced prompt engineering, user feedback optimization, and compliance-oriented deployments.
